Computer scientists established a new boundary on computationally verifiable knowledge. In doing so, they solved major open problems in quantum mechanics and pure mathematics.

In 1935, Albert Einstein, working with Boris Podolsky and Nathan Rosen, grappled with a possibility revealed by the new laws of quantum physics: that two particles could be entangled, or correlated, even across vast distances.

The very next year, Alan Turing formulated the first general theory of computing and proved that there exists a problem that computers will never be able to solve.

These two ideas revolutionized their respective disciplines. They also seemed to have nothing to do with each other. But now a landmark proof has combined them while solving a raft of open problems in computer science, physics and mathematics.

The new proof establishes that quantum computers that calculate with entangled quantum bits, or qubits, rather than classical 1s and 0s, can theoretically be used to verify answers to an incredibly vast set of problems. The correspondence between entanglement and computing came as a jolt to many researchers.

“It was a complete surprise,” said Miguel Navascués, who studies quantum physics at the Institute for Quantum Optics and Quantum Information in Vienna.

The proof’s co-authors set out to determine the limits of an approach to verifying answers to computational problems, one that involves entanglement. By finding that limit, the researchers ended up settling two other questions almost as a byproduct: Tsirelson’s problem in physics, about how to mathematically model entanglement, and a related problem in pure mathematics called the Connes embedding conjecture.

In the end, the results cascaded like dominoes.

“The ideas all came from the same time. It’s neat that they come back together again in this dramatic way,” said Henry Yuen of the University of Toronto, an author of the proof along with Zhengfeng Ji of the University of Technology Sydney, Anand Natarajan and Thomas Vidick of the California Institute of Technology, and John Wright of the University of Texas, Austin. The five researchers are all computer scientists.

Undecidable Problems

Turing defined a basic framework for thinking about computation before computers really existed. In nearly the same breath, he showed that there was a certain problem computers were provably incapable of addressing. It has to do with whether a program ever stops.

Typically, computer programs receive inputs and produce outputs. But sometimes they get stuck in infinite loops and spin their wheels forever. When that happens at home, there’s only one thing left to do.

“You have to manually kill the program. Just cut it off,” Yuen said.

Turing proved that there’s no all-purpose algorithm that can determine whether a computer program will halt or run forever. You have to run the program to find out.
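The heart of Turing's argument can be sketched in a few lines of Python. This is an illustration rather than his formal proof: "programs" are modeled as Python generators so they can be run one step at a time, and the names `make_contrarian`, `halts_within`, `optimist` and `pessimist` are invented for this example. Given any function that claims to predict halting, we build a program that asks for the prediction about itself and then does the opposite.

```python
def make_contrarian(claims_to_halt):
    """Build a program that does the opposite of what the decider predicts for it."""
    def contrarian():
        if claims_to_halt(contrarian):
            while True:  # the decider said "halts", so loop forever
                yield    # one simulated step
        # the decider said "runs forever", so fall through and halt at once
    return contrarian


def halts_within(program, max_steps):
    """Run a generator-based program for at most max_steps steps."""
    steps = program()
    for _ in range(max_steps):
        try:
            next(steps)
        except StopIteration:
            return True
    return False


def optimist(program):
    return True   # hypothetical decider: "every program halts"


def pessimist(program):
    return False  # hypothetical decider: "no program halts"


# The optimist's contrarian is still running after 10,000 steps
# (by construction it never stops), so the optimist was wrong about it.
optimist_refuted = not halts_within(make_contrarian(optimist), 10_000)
# The pessimist's contrarian stops immediately, so the pessimist was wrong too.
pessimist_refuted = halts_within(make_contrarian(pessimist), 10_000)
print(optimist_refuted, pessimist_refuted)
```

The two deciders here are deliberately crude, but the same trap works against any candidate: whatever it answers about its own contrarian program, the program does the reverse.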


“You’ve waited a million years and a program hasn’t halted. Do you just need to wait 2 million years? There’s no way of telling,” said William Slofstra, a mathematician at the University of Waterloo.

In technical terms, Turing proved that this halting problem is undecidable — even the most powerful computer imaginable couldn’t solve it.

After Turing, computer scientists began to classify other problems by their difficulty. Harder problems require more computational resources to solve — more running time, more memory. This is the study of computational complexity.

Ultimately, every problem presents two big questions: “How hard is it to solve?” and “How hard is it to verify that an answer is correct?”

Interrogate to Verify

When problems are relatively simple, you can check the answer yourself. But when they get more complicated, even checking an answer can be an overwhelming task. However, in 1985 computer scientists realized it’s possible to develop confidence that an answer is correct even when you can’t confirm it yourself.

The method follows the logic of a police interrogation.

If a suspect tells an elaborate story, maybe you can’t go out into the world to confirm every detail. But by asking the right questions, you can catch your suspect in a lie or develop confidence that the story checks out.

In computer science terms, the two parties in an interrogation are a powerful computer that proposes a solution to a problem — known as the prover — and a less powerful computer that wants to ask the prover questions to determine whether the answer is correct. This second computer is called the verifier.

To take a simple example, imagine you’re colorblind and someone else — the prover — claims two marbles are different colors. You can’t check this claim by yourself, but through clever interrogation you can still determine whether it’s true.

Put the two marbles behind your back and mix them up. Then ask the prover to tell you which is which. If they really are different colors, the prover should answer the question correctly every time. If the marbles are actually the same color — meaning they look identical — the prover will guess wrong half the time.

“If I see you succeed a lot more than half the time, I’m pretty sure they’re not” the same color, Vidick said.
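The marble test is easy to simulate. The sketch below is a toy model, assuming the verifier's shuffle is a fair coin flip and that a prover facing identical marbles can do no better than guess; the function name `run_rounds` and the round counts are chosen for illustration.

```python
import random


def run_rounds(marbles_differ, rounds, rng):
    """Return how many rounds the prover answers correctly."""
    correct = 0
    for _ in range(rounds):
        swapped = rng.random() < 0.5      # the verifier's secret shuffle
        if marbles_differ:
            answer = swapped              # the prover sees the colors and knows
        else:
            answer = rng.random() < 0.5   # identical marbles: the prover must guess
        correct += (answer == swapped)
    return correct


rng = random.Random(0)
honest = run_rounds(True, 1000, rng)      # marbles really are different colors
bluffing = run_rounds(False, 1000, rng)   # marbles are actually identical
fooled_chance = 0.5 ** 20                 # odds a bluffer survives 20 rounds in a row
print(honest, bluffing, fooled_chance)
```

An honest prover is right in every round, while a bluffing one hovers around half. The chance of a bluffer guessing correctly 20 times in a row is already below one in a million, which is why a modest number of rounds is enough to build confidence.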

By asking a prover questions, you can verify solutions to a wider class of problems than you can on your own.

In 1988, computer scientists considered what happens when two provers propose solutions to the same problem. After all, if you have two suspects to interrogate, it’s even easier to solve a crime, or verify a solution, since you can play them against each other.

“It gives more leverage to the verifier. You interrogate, ask related questions, cross-check the answers,” Vidick said. If the suspects are telling the truth, their responses should align most of the time. If they’re lying, the answers will conflict more often.

Similarly, researchers showed that by interrogating two provers separately about their answers, you can quickly verify solutions to an even larger class of problems than you can when you only have one prover to interrogate.

Computational complexity may seem entirely theoretical, but it’s also closely connected to the real world. The resources that computers need to solve and verify problems — time and memory — are fundamentally physical. For this reason, new discoveries in physics can change computational complexity.

“If you choose a different set of physics, like quantum rather than classical, you get a different complexity theory out of it,” Natarajan said.

The new proof is the end result of 21st-century computer scientists confronting one of the strangest ideas of 20th-century physics: entanglement.

The Connes Embedding Conjecture

When two particles are entangled, they don’t actually affect each other — they have no causal relationship. Einstein and his co-authors elaborated on this idea in their 1935 paper. Afterward, physicists and mathematicians tried to come up with a mathematical way of describing what entanglement really meant.

Yet the effort came out a little muddled. Scientists came up with two different mathematical models for entanglement — and it wasn’t clear that they were equivalent to each other.

In a roundabout way, this potential dissonance ended up producing an important problem in pure mathematics called the Connes embedding conjecture. Eventually, it also served as a fissure that the five computer scientists took advantage of in their new proof.

The first way of modeling entanglement was to think of the particles as spatially isolated from each other. One is on Earth, say, and the other is on Mars; the distance between them is what prevents causality. This is called the tensor product model.

But in some situations, it’s not entirely obvious when two things are causally separate from each other. So mathematicians came up with a second, more general way of describing causal independence.

When the order in which you perform two operations doesn’t affect the outcome, the operations “commute”: 3 x 2 is the same as 2 x 3. In this second model, particles are entangled when their properties are correlated but the order in which you perform your measurements doesn’t matter: Measure particle A to predict the momentum of particle B or vice versa. Either way, you get the same answer. This is called the commuting operator model of entanglement.

Both descriptions of entanglement use arrays of numbers organized into rows and columns called matrices. The tensor product model uses matrices with a finite number of rows and columns. The commuting operator model uses a more general object that functions like a matrix with an infinite number of rows and columns.
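To make "commute" concrete for matrices, here is a small sketch in plain Python. The particular 2-by-2 matrices `X` and `Z` are illustrative choices, not taken from the proof. Applied to the same particle, they give different results depending on the order. But when one acts only on Alice's particle and the other only on Bob's, which is how the finite-dimensional tensor product model builds its matrices, the order stops mattering.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]


def kron(a, b):
    """Tensor (Kronecker) product of two matrices."""
    return [[a_ij * b_kl for a_ij in a_row for b_kl in b_row]
            for a_row in a for b_row in b]


def commutes(a, b):
    return matmul(a, b) == matmul(b, a)


X = [[0, 1], [1, 0]]    # illustrative measurement matrix
Z = [[1, 0], [0, -1]]   # a second one that clashes with X
I2 = [[1, 0], [0, 1]]   # "do nothing" on a particle

same_particle = commutes(X, Z)       # both act on one particle
alice_op = kron(X, I2)               # X on Alice's particle, nothing on Bob's
bob_op = kron(I2, Z)                 # nothing on Alice's particle, Z on Bob's
separate_particles = commutes(alice_op, bob_op)
print(same_particle, separate_particles)
```

The first check fails and the second succeeds: operations on separate particles always commute in this construction. The commuting operator model keeps only that requirement and drops the assumption that the matrices are built from two separate finite blocks.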

Over time, mathematicians began to study these matrices as objects of interest in their own right, completely apart from any connection to the physical world. As part of this work, a mathematician named Alain Connes conjectured in 1976 that it should be possible to approximate many infinite-dimensional matrices with finite-dimensional ones. This is one implication of the Connes embedding conjecture.

The following decade a physicist named Boris Tsirelson posed a version of the problem that grounded it in physics once more. Tsirelson conjectured that the tensor product and commuting operator models of entanglement were roughly equivalent. This makes sense, since they’re theoretically two different ways of describing the same physical phenomenon. Subsequent work showed that because of the connection between matrices and the physical models that use them, the Connes embedding conjecture and Tsirelson’s problem imply each other: Solve one, and you solve the other.

Yet the solution to both problems ended up coming from a third place altogether.

Game Show Physics

In the 1960s, a physicist named John Bell came up with a test for determining whether entanglement was a real physical phenomenon, rather than just a theoretical notion. The test involved a kind of game whose outcome reveals whether something more than ordinary, non-quantum physics is at work.

Computer scientists would later realize that this test about entanglement could also be used as a tool for verifying answers to very complicated problems.

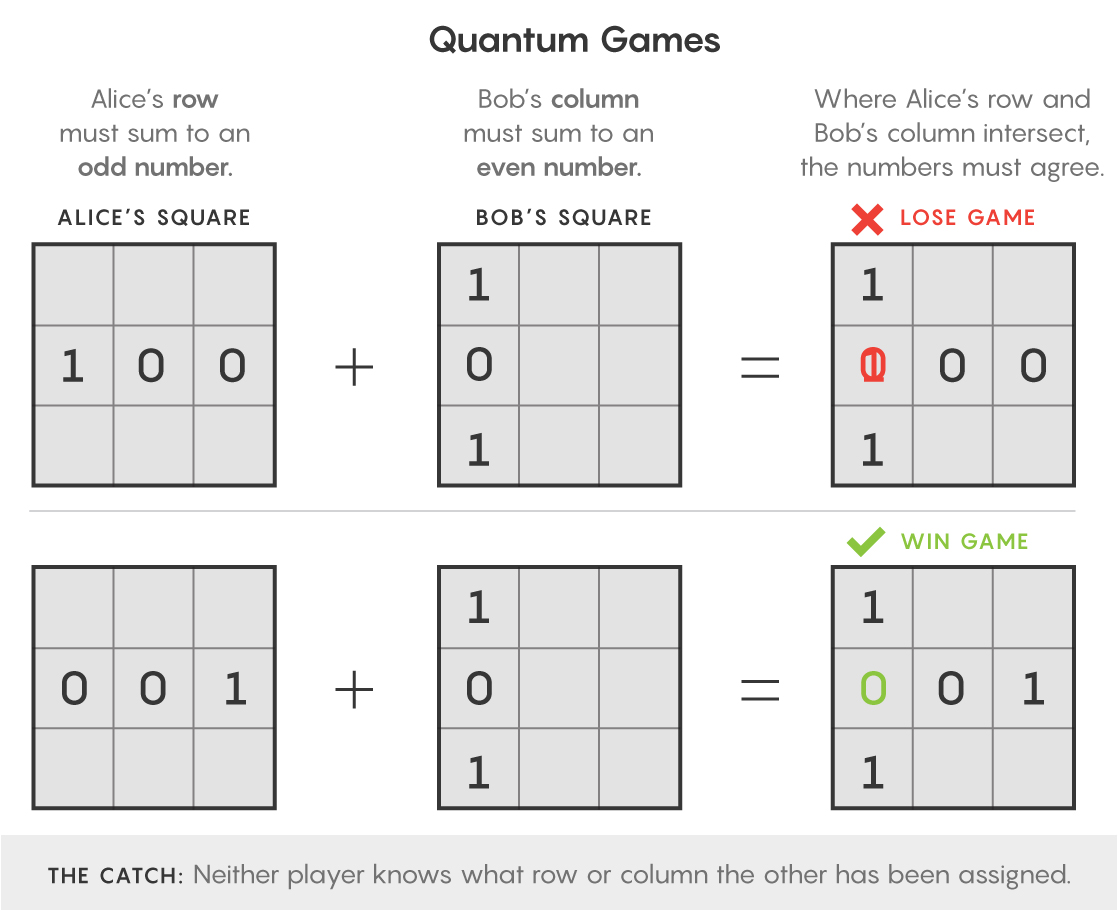

But first, to see how the games work, let’s imagine two players, Alice and Bob, and a 3-by-3 grid. A referee assigns Alice a row and tells her to enter a 0 or a 1 in each box so that the digits sum to an odd number. Bob gets a column and has to fill it out so that it sums to an even number. They win if they put the same number in the one place her row and his column overlap. They’re not allowed to communicate.

Under normal circumstances, the best they can do is win 89% of the time. But under quantum circumstances, they can do better.
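The 89% figure can be checked by brute force. The sketch below assumes the referee picks the row and the column uniformly at random, and it searches only deterministic strategies, in which Alice and Bob each decide in advance how they will fill in every row or column they might be given. (Shared coin flips cannot beat the best deterministic plan.) The variable names are chosen for this example.

```python
from fractions import Fraction
from itertools import product


def lines_with_parity(parity):
    """All ways to fill three boxes with 0s and 1s so the sum has the given parity."""
    return [bits for bits in product((0, 1), repeat=3) if sum(bits) % 2 == parity]


odd_rows = lines_with_parity(1)    # Alice's legal fills (odd sum)
even_cols = lines_with_parity(0)   # Bob's legal fills (even sum)

best = 0
for alice in product(odd_rows, repeat=3):       # alice[r] is her fill for row r
    for bob in product(even_cols, repeat=3):    # bob[c] is his fill for column c
        # They win a round when they agree on the box where row r meets column c.
        wins = sum(alice[r][c] == bob[c][r] for r in range(3) for c in range(3))
        best = max(best, wins)

best_rate = Fraction(best, 9)
print(best_rate)  # 8/9
```

No pair of plans wins all nine row-and-column combinations. If one did, the two players would be describing a single grid whose rows each sum to an odd number (making the grand total odd) and whose columns each sum to an even number (making it even), which is impossible. The best they can manage is 8 out of 9, or about 89%.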

Imagine Alice and Bob split a pair of entangled particles. They perform measurements on their respective particles and use the results to dictate whether to write 1 or 0 in each box. Because the particles are entangled, the results of their measurements are going to be correlated, which means their answers will correlate as well — meaning they can win the game 100% of the time.

So if you see two players winning the game at unexpectedly high rates, you can conclude that they are using something other than classical physics to their advantage. Such Bell-type experiments are now called “nonlocal” games, in reference to the separation between the players. Physicists actually perform them in laboratories.

“People have run experiments over the years that really show this spooky thing is real,” said Yuen.

As when analyzing any game, you might want to know how often players can win a nonlocal game, provided they play the best they can. For example, with solitaire, you can calculate how often someone playing perfectly is likely to win.

But in 2016, William Slofstra proved that there’s no general algorithm for calculating the exact maximum winning probability for all nonlocal games. So researchers wondered: Could you at least approximate the maximum-winning percentage?

Computer scientists have homed in on an answer using the two models describing entanglement. An algorithm that uses the tensor product model establishes a floor, or minimum value, on the approximate maximum-winning probability for all nonlocal games. Another algorithm, which uses the commuting operator model, establishes a ceiling.

These algorithms produce more precise answers the longer they run. If Tsirelson’s prediction is true, and the two models really are equivalent, the floor and the ceiling should keep pinching closer together, narrowing in on a single value for the approximate maximum-winning percentage.

But if Tsirelson’s prediction is false, and the two models are not equivalent, “the ceiling and the floor will forever stay separated,” Yuen said. There will be no way to calculate even an approximate winning percentage for nonlocal games.
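The pinching procedure can be sketched as a simple loop. The real floor and ceiling algorithms are far more involved and are not reproduced here; `toy_floor` and `toy_ceiling` are stand-in sequences invented for illustration, and `approximate_value` is a hypothetical name. The point is only the shape of the argument: run both algorithms side by side and stop once they agree to within the tolerance you want.

```python
from itertools import count


def approximate_value(lower_bounds, upper_bounds, tolerance):
    """Advance both sequences together; stop when floor and ceiling nearly meet."""
    for floor, ceiling in zip(lower_bounds, upper_bounds):
        if ceiling - floor <= tolerance:
            return (floor + ceiling) / 2
    return None  # reached only if the sequences run out first


def toy_floor(value):
    """Stand-in for the tensor product algorithm: bounds rising toward the value."""
    return (value - 1 / 2**n for n in count(1))


def toy_ceiling(value):
    """Stand-in for the commuting operator algorithm: bounds falling toward it."""
    return (value + 1 / 2**n for n in count(1))


estimate = approximate_value(toy_floor(0.75), toy_ceiling(0.75), 1e-3)
print(estimate)
```

In this toy the two sequences close in on the same number, so the loop finishes. If the floor and ceiling for some game converged to different numbers, the gap would never fall below a small enough tolerance and the loop would run forever.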

In their new work, the five researchers used this question, about whether the ceiling and floor converge and whether Tsirelson’s prediction holds, to solve a separate question about when it’s possible to verify the answer to a computational problem.

Entangled Assistance

In the early 2000s, computer scientists began to wonder: How does it change the range of problems you can verify if you interrogate two provers that share entangled particles?

Most assumed that entanglement worked against verification. After all, two suspects would have an easier time telling a consistent lie if they had some means of coordinating their answers.

But over the last few years, computer scientists have realized that the opposite is true: By interrogating provers that share entangled particles, you can verify a much larger class of problems than you can without entanglement.

“Entanglement is a way to generate correlations that you think might help them lie or cheat,” Vidick said. “But in fact you can use that to your advantage.”

To understand how, you first need to grasp the almost otherworldly scale of the problems whose solutions you could verify through this interactive procedure.

Imagine a graph — a collection of dots (vertices) connected by lines (edges). You might want to know whether it’s possible to color the vertices using three colors, so that no vertices connected by an edge have the same color. If you can, the graph is “three-colorable.”

If you hand a pair of entangled provers a very large graph, and they report back that it can be three-colored, you’ll wonder: Is there a way to verify their answer?

For very big graphs, it would be impossible to check the work directly. So instead, you could ask each prover to tell you the color of one of two connected vertices. If they each report a different color, and they keep doing so every time you ask, you’ll gain confidence that the three-coloring really works.
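Here is a simplified, purely classical sketch of that spot check. It leaves out the two provers and the entanglement and just tests a fixed table of colors against randomly chosen edges. The five-vertex graph, the colorings and the function names are all made up for the example.

```python
import random


def is_valid_coloring(edges, coloring):
    """Full check: no edge joins two vertices of the same color."""
    return all(coloring[u] != coloring[v] for u, v in edges)


def spot_check(edges, coloring, rounds, rng):
    """Sample random edges; fail as soon as both endpoints share a color."""
    for _ in range(rounds):
        u, v = rng.choice(edges)
        if coloring[u] == coloring[v]:
            return False
    return True


edges = [(0, 1), (1, 2), (2, 3), (3, 4), (4, 0)]   # a five-vertex loop
good = {0: "red", 1: "green", 2: "red", 3: "green", 4: "blue"}
bad = {**good, 4: "red"}                           # vertices 4 and 0 now clash

rng = random.Random(0)
good_passes = spot_check(edges, good, 200, rng)
bad_passes = spot_check(edges, bad, 200, rng)
print(good_passes, bad_passes)
```

The valid coloring passes every round. The flawed one has a single bad edge out of five, so each round catches it with a 20% chance, and surviving 200 rounds is astronomically unlikely. Checking a handful of random edges is far cheaper than checking all of them, which is the appeal of interrogation over direct verification.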

But even this interrogation strategy fails as graphs get really big — with more edges and vertices than there are atoms in the universe. Even the task of stating a specific question (“Tell me the color of XYZ vertex”) is more than you, the verifier, can manage: The amount of data required to name a specific vertex is more than you can hold in your working memory.

But entanglement makes it possible for the provers to come up with the questions themselves.

“The verifier doesn’t have to compute the questions. The verifier forces the provers to compute the questions for them,” Wright said.

The verifier wants the provers to report the colors of connected vertices. If the vertices aren’t connected, then the answers to the questions won’t say anything about whether the graph is three-colored. In other words, the verifier wants the provers to ask correlated questions: One prover asks about vertex ABC and the other asks about vertex XYZ. The hope is that the two vertices are connected to each other, even though neither prover knows which vertex the other is thinking about. (Just as Alice and Bob hope to fill in the same number in the same square even though neither knows which row or column the other has been asked about.)

If two provers were coming up with these questions completely on their own, there’d be no way to force them to select connected, or correlated, vertices in a way that would allow the verifier to validate their answers. But such correlation is exactly what entanglement enables.

“We’re going to use entanglement to offload almost everything onto the provers. We make them select questions by themselves,” Vidick said.

At the end of this procedure, the provers each report a color. The verifier checks whether they’re the same or not. If the graph really is three-colorable, the provers should never report the same color.

“If there is a three-coloring, the provers will be able to convince you there is one,” Yuen said.

As it turns out, this verification procedure is another example of a nonlocal game. The provers “win” if they convince you their solution is correct.

In 2012, Vidick and Tsuyoshi Ito proved that it’s possible to play a wide variety of nonlocal games with entangled provers to verify answers to at least as large a class of problems as you can verify by interrogating two classical computers. That is, using entangled provers doesn’t work against verification. And last year, Natarajan and Wright proved that interacting with entangled provers actually expands the class of problems that can be verified.

But computer scientists didn’t know the full range of problems that can be verified in this way. Until now.

A Cascade of Consequences

In their new paper, the five computer scientists prove that interrogating entangled provers makes it possible to verify answers to unsolvable problems, including the halting problem.

“The verification capability of this type of model is really mind-boggling,” Yuen said.

But the halting problem can’t be solved. And that fact is the spark that sets the final proof in motion.

Imagine you hand a program to a pair of entangled provers. You ask them to tell you whether it will halt. You’re prepared to verify their answer through a kind of nonlocal game: The provers generate questions and “win” based on the coordination between their answers.

If the program does in fact halt, the provers should be able to win this game 100% of the time — similar to how if a graph is actually three-colorable, entangled provers should never report the same color for two connected vertices. If it doesn’t halt, the provers should only win by chance — 50% of the time.

That means if someone asks you to determine the approximate maximum-winning probability for a specific instance of this nonlocal game, you will first need to solve the halting problem. And solving the halting problem is impossible. Which means that calculating the approximate maximum-winning probability for nonlocal games is undecidable, just like the halting problem.

This in turn means that the answer to Tsirelson’s problem is no — the two models of entanglement are not equivalent. Because if they were, you could pinch the floor and the ceiling together to calculate an approximate maximum-winning probability.

“There cannot be such an algorithm, so the two [models] must be different,” said David Pérez-García of the Complutense University of Madrid.

The new paper proves that the class of problems that can be verified through interactions with entangled quantum provers, a class called MIP*, is exactly equal to the class of problems that are no harder than the halting problem, a class called RE. The title of the paper states it succinctly: “MIP* = RE.”

In the course of proving that the two complexity classes are equal, the computer scientists proved that the answer to Tsirelson’s problem is no, which, due to previous work, meant that the Connes embedding conjecture is false.

For researchers in these fields, it was stunning that answers to such big problems would fall out from a seemingly unrelated proof in computer science.

“If I see a paper that says MIP* = RE, I don’t think it has anything to do with my work,” said Navascués, who co-authored previous work tying Tsirelson’s problem and the Connes embedding conjecture together. “For me it was a complete surprise.”

Quantum physicists and mathematicians are just beginning to digest the proof. Prior to the new work, mathematicians had wondered whether they could get away with approximating infinite-dimensional matrices by using large finite-dimensional ones instead. Now, because the Connes embedding conjecture is false, they know they can’t.

“Their result implies that’s impossible,” said Slofstra.

The computer scientists themselves did not aim to answer the Connes embedding conjecture, and as a result, they’re not in the best position to explain the implications of one of the problems they ended up solving.

“Personally, I’m not a mathematician. I don’t understand the original formulation of the Connes embedding conjecture well,” said Natarajan.

He and his co-authors anticipate that mathematicians will translate this new result into the language of their own field. In a blog post announcing the proof, Vidick wrote, “I don’t doubt that eventually complexity theory will not be needed to obtain the purely mathematical consequences.”

Yet as other researchers run with the proof, the line of inquiry that prompted it is coming to a halt. For more than three decades, computer scientists have been trying to figure out just how far interactive verification will take them. They are now confronted with the answer, in the form of a long paper with a simple title and echoes of Turing.

“There’s this long sequence of works just wondering how powerful” a verification procedure with two entangled quantum provers can be, Natarajan said. “Now we know how powerful it is. That story is at an end.”

This article was first published on Quanta Magazine